#Amazon Redshift Data Visualization

Explore tagged Tumblr posts

Text

Guide to Amazon Redshift Data Visualization: Unlocking Insights

Amazon Redshift, a viable data warehousing arrangement, has been made as an establishment for storing and analyzing endless sums of information. In any case, the true potential of Redshift is opened when coupled with effective data visualization strategies. In this blog, we jump into the world of Amazon Redshift data visualization, exploring its significance, best practices, and devices to handle its full potential.

Understanding Amazon Redshift Data Visualization

Amazon Redshift, a fully managed data distribution center benefit within the cloud, offers high performance, versatility, and cost-effectiveness. It enables businesses to analyze enormous datasets efficiently. In any case, raw data alone is frequently overwhelming and challenging to translate. Amazon Redshift Data Visualization is useful in this situation.

Data visualization is the graphical representation of data and information. It streamlines complex datasets, making plans, patterns, and experiences more accessible and understandable. By visualizing information stored in Amazon Redshift, organizations can reveal valuable insights, make informed decisions, and drive business victory.

Key Features and Benefits

1. Scalability

Amazon Redshift consistently scales to accommodate growing datasets, guaranteeing ideal performance even as information volumes increase.

2. Speed

With Amazon Redshift's lightning-fast inquiry execution, clients can produce visualizations in real time, empowering fast decision-making.

3. Integration

Amazon Redshift offers adaptability and workflow compatibility by reliably integrating with well-known business intelligence products such as Tableau, Looker, and Power BI.

4. Security

Built-in security highlights such as encryption and access controls ensure that sensitive data remains protected at all times.

5. Cost-Effectiveness

Amazon Redshift's pay-as-you-go pricing show implies clients only pay for the assets they consume, minimizing overhead costs.

The Importance of Data Visualization with Amazon Redshift

· Enhanced Decision Making

Visual representations of information empower partners to grasp complex concepts quickly and make data-driven choices with certainty.

· Identification of Trends and Patterns

Visualization tools help identify patterns, designs, and outliers in data, encouraging proactive methodologies and risk mitigation.

· Improved Communication

Visualizations give a common language for communicating insights across divisions, improving collaboration and arrangement inside organizations.

· Real-Time Monitoring

With real-time visualization dashboards, organizations can screen key measurements and execution markers, empowering convenient interventions and optimizations.

Best Practices for Amazon Redshift Data Visualization

1) Understand Your Audience

Whether your audience is composed of operational workers, investigators, or executives, design visualizations to suit their requirements and preferences.

2) Choose the right Visualization Type

Select visualization types (e.g., bar charts, line graphs, and heatmaps) that successfully communicate the basic data insights and relationships.

3) Ensure Data Accuracy and Consistency

Approve and cleanse data before visualization to maintain accuracy and consistency, minimizing mistakes and misinterpretations.

4) Optimize Performance

Optimize queries and data recovery forms to guarantee quick and efficient rendering of visualizations, particularly with expansive datasets stored in Amazon Redshift.

5) Emphasize Interactivity

Incorporate intuitive highlights into visualizations, such as drill-down capabilities and channels, enabling clients to investigate information powerfully and extract more profound insights.

6) Design for Accessibility

Ensure visualizations are open to all clients, including those with disabilities, by adhering to accessibility measures and giving elective content descriptions.

Tools for Amazon Redshift Data Visualization

· Amazon QuickSight

Amazon's fully managed business intelligence service integrates consistently with Amazon Redshift, offering natural dashboards, ad hoc analysis, and machine learning-powered insights.

· Tableau

A driving information visualization stage, Scene interfaces to Amazon Redshift to make intelligently and shareable dashboards, empowering clients to investigate information easily.

· Power BI

Microsoft's Power BI suite provides strong data visualization capabilities, permitting clients to connect directly to Amazon Redshift and transform raw information into compelling visual stories.

· Looker

Looker, now part of Google Cloud, offers an information investigation and visualization platform that interfaces specifically with Amazon Redshift, empowering organizations to construct customized data experiences.

· Metabase

An open-source business intelligence tool, Metabase interfaces with Amazon Redshift, giving a simple yet powerful solution for making and sharing visualizations within organizations.

Conclusion

In conclusion, Amazon Redshift data visualization is a transformative capability that engages organizations to open the full potential of their information. By leveraging visualization best practices and tools custom-made for Amazon Redshift, businesses can gain more profound experiences, drive informed decision-making, and remain ahead in today's competitive scene. Grasp the control of visualization with Amazon Redshift, and set out on a journey of data-driven advancement and victory.

Frequently Asked Questions

Q1: How does Amazon Redshift Data Visualization work?

Ans: To extract data for visualization, Amazon Redshift Data Visualization must first interface with your Redshift cluster. Customers can then create charts, graphs, and dashboards that are visually appealing and informatively depict their data by using certain visualization tools and techniques.

Q2: What types of visualizations can I create with Amazon Redshift Data Visualization?

Ans: Amazon Redshift Data Visualization supports a wide run of visualization sorts, including bar charts, line graphs, and diffuse plots, pie charts, heatmaps, and more. Clients can select the visualization type that best suits their information and investigation needs.

Q3: Can I integrate Amazon Redshift Data Visualization with other BI tools?Ans: Yes, Amazon Redshift Information Visualization seamlessly coordinates with popular business intelligence (BI) devices such as Tableau, Looker, Power BI, and more. This integration permits clients to use their favored BI tools while still benefiting from the powerful data processing capabilities of Amazon Redshift.

0 notes

Text

Data Engineering Concepts, Tools, and Projects

All the associations in the world have large amounts of data. If not worked upon and anatomized, this data does not amount to anything. Data masterminds are the ones. who make this data pure for consideration. Data Engineering can nominate the process of developing, operating, and maintaining software systems that collect, dissect, and store the association’s data. In modern data analytics, data masterminds produce data channels, which are the structure armature.

How to become a data engineer:

While there is no specific degree requirement for data engineering, a bachelor's or master's degree in computer science, software engineering, information systems, or a related field can provide a solid foundation. Courses in databases, programming, data structures, algorithms, and statistics are particularly beneficial. Data engineers should have strong programming skills. Focus on languages commonly used in data engineering, such as Python, SQL, and Scala. Learn the basics of data manipulation, scripting, and querying databases.

Familiarize yourself with various database systems like MySQL, PostgreSQL, and NoSQL databases such as MongoDB or Apache Cassandra.Knowledge of data warehousing concepts, including schema design, indexing, and optimization techniques.

Data engineering tools recommendations:

Data Engineering makes sure to use a variety of languages and tools to negotiate its objects. These tools allow data masterminds to apply tasks like creating channels and algorithms in a much easier as well as effective manner.

1. Amazon Redshift: A widely used cloud data warehouse built by Amazon, Redshift is the go-to choice for many teams and businesses. It is a comprehensive tool that enables the setup and scaling of data warehouses, making it incredibly easy to use.

One of the most popular tools used for businesses purpose is Amazon Redshift, which provides a powerful platform for managing large amounts of data. It allows users to quickly analyze complex datasets, build models that can be used for predictive analytics, and create visualizations that make it easier to interpret results. With its scalability and flexibility, Amazon Redshift has become one of the go-to solutions when it comes to data engineering tasks.

2. Big Query: Just like Redshift, Big Query is a cloud data warehouse fully managed by Google. It's especially favored by companies that have experience with the Google Cloud Platform. BigQuery not only can scale but also has robust machine learning features that make data analysis much easier. 3. Tableau: A powerful BI tool, Tableau is the second most popular one from our survey. It helps extract and gather data stored in multiple locations and comes with an intuitive drag-and-drop interface. Tableau makes data across departments readily available for data engineers and managers to create useful dashboards. 4. Looker: An essential BI software, Looker helps visualize data more effectively. Unlike traditional BI tools, Looker has developed a LookML layer, which is a language for explaining data, aggregates, calculations, and relationships in a SQL database. A spectacle is a newly-released tool that assists in deploying the LookML layer, ensuring non-technical personnel have a much simpler time when utilizing company data.

5. Apache Spark: An open-source unified analytics engine, Apache Spark is excellent for processing large data sets. It also offers great distribution and runs easily alongside other distributed computing programs, making it essential for data mining and machine learning. 6. Airflow: With Airflow, programming, and scheduling can be done quickly and accurately, and users can keep an eye on it through the built-in UI. It is the most used workflow solution, as 25% of data teams reported using it. 7. Apache Hive: Another data warehouse project on Apache Hadoop, Hive simplifies data queries and analysis with its SQL-like interface. This language enables MapReduce tasks to be executed on Hadoop and is mainly used for data summarization, analysis, and query. 8. Segment: An efficient and comprehensive tool, Segment assists in collecting and using data from digital properties. It transforms, sends, and archives customer data, and also makes the entire process much more manageable. 9. Snowflake: This cloud data warehouse has become very popular lately due to its capabilities in storing and computing data. Snowflake’s unique shared data architecture allows for a wide range of applications, making it an ideal choice for large-scale data storage, data engineering, and data science. 10. DBT: A command-line tool that uses SQL to transform data, DBT is the perfect choice for data engineers and analysts. DBT streamlines the entire transformation process and is highly praised by many data engineers.

Data Engineering�� Projects:

Data engineering is an important process for businesses to understand and utilize to gain insights from their data. It involves designing, constructing, maintaining, and troubleshooting databases to ensure they are running optimally. There are many tools available for data engineers to use in their work such as My SQL, SQL server, oracle RDBMS, Open Refine, TRIFACTA, Data Ladder, Keras, Watson, TensorFlow, etc. Each tool has its strengths and weaknesses so it’s important to research each one thoroughly before making recommendations about which ones should be used for specific tasks or projects.

Smart IoT Infrastructure:

As the IoT continues to develop, the measure of data consumed with high haste is growing at an intimidating rate. It creates challenges for companies regarding storehouses, analysis, and visualization.

Data Ingestion:

Data ingestion is moving data from one or further sources to a target point for further preparation and analysis. This target point is generally a data storehouse, a unique database designed for effective reporting.

Data Quality and Testing:

Understand the importance of data quality and testing in data engineering projects. Learn about techniques and tools to ensure data accuracy and consistency.

Streaming Data:

Familiarize yourself with real-time data processing and streaming frameworks like Apache Kafka and Apache Flink. Develop your problem-solving skills through practical exercises and challenges.

Conclusion:

Data engineers are using these tools for building data systems. My SQL, SQL server and Oracle RDBMS involve collecting, storing, managing, transforming, and analyzing large amounts of data to gain insights. Data engineers are responsible for designing efficient solutions that can handle high volumes of data while ensuring accuracy and reliability. They use a variety of technologies including databases, programming languages, machine learning algorithms, and more to create powerful applications that help businesses make better decisions based on their collected data.

4 notes

·

View notes

Text

What’s the function of Tableau Prep?

Tableau Prep is a data preparation tool from Tableau that helps users clean, shape, and organize data before it is analyzed or visualized. It is especially useful for data analysts and business intelligence professionals who need to prepare data quickly and efficiently without writing complex code.

The core function of Tableau Prep is to simplify the data preparation process through an intuitive, visual interface. Users can drag and drop datasets, apply filters, rename fields, split or combine columns, handle null values, pivot data, and even join or union multiple data sources. These actions are displayed in a clear, step-by-step workflow, which makes it easy to understand how data is transformed at each stage.

Tableau Prep includes two main components: Prep Builder, used to create and edit data preparation workflows, and Prep Conductor, which automates the running of flows and integrates with Tableau Server or Tableau Cloud for scheduled data refreshes. This automation is a major advantage, especially in dynamic environments where data updates regularly.

Another significant benefit is real-time previews. As users manipulate data, they can instantly see the effects of their actions, allowing for better decisions and error checking. It supports connections to various data sources such as Excel, SQL databases, and cloud platforms like Google BigQuery or Amazon Redshift.

Tableau Prep’s seamless integration with Tableau Desktop means that once data is prepped, it can be directly pushed into visualization dashboards without exporting and re-importing files.

In short, Tableau Prep helps streamline the otherwise time-consuming process of cleaning and preparing data, making it more accessible to analysts without deep programming knowledge.

If you’re looking to master tools like Tableau Prep and enter the analytics field, consider enrolling in a data analyst course with placement for hands-on training and career support.

0 notes

Text

Introduction to AWS Data Engineering: Key Services and Use Cases

Introduction

Business operations today generate huge datasets which need significant amounts of processing during each operation. Data handling efficiency is essential for organization decision making and expansion initiatives. Through its cloud solutions known as Amazon Web Services (AWS) organizations gain multiple data-handling platforms which construct protected and scalable data pipelines at affordable rates. AWS data engineering solutions enable organizations to both acquire and store data and perform analytical tasks and machine learning operations. A suite of services allows business implementation of operational workflows while organizations reduce costs and boost operational efficiency and maintain both security measures and regulatory compliance. The article presents basic details about AWS data engineering solutions through their practical applications and actual business scenarios.

What is AWS Data Engineering?

AWS data engineering involves designing, building, and maintaining data pipelines using AWS services. It includes:

Data Ingestion: Collecting data from sources such as IoT devices, databases, and logs.

Data Storage: Storing structured and unstructured data in a scalable, cost-effective manner.

Data Processing: Transforming and preparing data for analysis.

Data Analytics: Gaining insights from processed data through reporting and visualization tools.

Machine Learning: Using AI-driven models to generate predictions and automate decision-making.

With AWS, organizations can streamline these processes, ensuring high availability, scalability, and flexibility in managing large datasets.

Key AWS Data Engineering Services

AWS provides a comprehensive range of services tailored to different aspects of data engineering.

Amazon S3 (Simple Storage Service) – Data Storage

Amazon S3 is a scalable object storage service that allows organizations to store structured and unstructured data. It is highly durable, offers lifecycle management features, and integrates seamlessly with AWS analytics and machine learning services.

Supports unlimited storage capacity for structured and unstructured data.

Allows lifecycle policies for cost optimization through tiered storage.

Provides strong integration with analytics and big data processing tools.

Use Case: Companies use Amazon S3 to store raw log files, multimedia content, and IoT data before processing.

AWS Glue – Data ETL (Extract, Transform, Load)

AWS Glue is a fully managed ETL service that simplifies data preparation and movement across different storage solutions. It enables users to clean, catalog, and transform data automatically.

Supports automatic schema discovery and metadata management.

Offers a serverless environment for running ETL jobs.

Uses Python and Spark-based transformations for scalable data processing.

Use Case: AWS Glue is widely used to transform raw data before loading it into data warehouses like Amazon Redshift.

Amazon Redshift – Data Warehousing and Analytics

Amazon Redshift is a cloud data warehouse optimized for large-scale data analysis. It enables organizations to perform complex queries on structured datasets quickly.

Uses columnar storage for high-performance querying.

Supports Massively Parallel Processing (MPP) for handling big data workloads.

It integrates with business intelligence tools like Amazon QuickSight.

Use Case: E-commerce companies use Amazon Redshift for customer behavior analysis and sales trend forecasting.

Amazon Kinesis – Real-Time Data Streaming

Amazon Kinesis allows organizations to ingest, process, and analyze streaming data in real-time. It is useful for applications that require continuous monitoring and real-time decision-making.

Supports high-throughput data ingestion from logs, clickstreams, and IoT devices.

Works with AWS Lambda, Amazon Redshift, and Amazon Elasticsearch for analytics.

Enables real-time anomaly detection and monitoring.

Use Case: Financial institutions use Kinesis to detect fraudulent transactions in real-time.

AWS Lambda – Serverless Data Processing

AWS Lambda enables event-driven serverless computing. It allows users to execute code in response to triggers without provisioning or managing servers.

Executes code automatically in response to AWS events.

Supports seamless integration with S3, DynamoDB, and Kinesis.

Charges only for the compute time used.

Use Case: Lambda is commonly used for processing image uploads and extracting metadata automatically.

Amazon DynamoDB – NoSQL Database for Fast Applications

Amazon DynamoDB is a managed NoSQL database that delivers high performance for applications that require real-time data access.

Provides single-digit millisecond latency for high-speed transactions.

Offers built-in security, backup, and multi-region replication.

Scales automatically to handle millions of requests per second.

Use Case: Gaming companies use DynamoDB to store real-time player progress and game states.

Amazon Athena – Serverless SQL Analytics

Amazon Athena is a serverless query service that allows users to analyze data stored in Amazon S3 using SQL.

Eliminates the need for infrastructure setup and maintenance.

Uses Presto and Hive for high-performance querying.

Charges only for the amount of data scanned.

Use Case: Organizations use Athena to analyze and generate reports from large log files stored in S3.

AWS Data Engineering Use Cases

AWS data engineering services cater to a variety of industries and applications.

Healthcare: Storing and processing patient data for predictive analytics.

Finance: Real-time fraud detection and compliance reporting.

Retail: Personalizing product recommendations using machine learning models.

IoT and Smart Cities: Managing and analyzing data from connected devices.

Media and Entertainment: Streaming analytics for audience engagement insights.

These services empower businesses to build efficient, scalable, and secure data pipelines while reducing operational costs.

Conclusion

AWS provides a comprehensive ecosystem of data engineering tools that streamline data ingestion, storage, transformation, analytics, and machine learning. Services like Amazon S3, AWS Glue, Redshift, Kinesis, and Lambda allow businesses to build scalable, cost-effective, and high-performance data pipelines.

Selecting the right AWS services depends on the specific needs of an organization. For those looking to store vast amounts of unstructured data, Amazon S3 is an ideal choice. Companies needing high-speed data processing can benefit from AWS Glue and Redshift. Real-time data streaming can be efficiently managed with Kinesis. Meanwhile, AWS Lambda simplifies event-driven processing without requiring infrastructure management.

Understanding these AWS data engineering services allows businesses to build modern, cloud-based data architectures that enhance efficiency, security, and performance.

References

For further reading, refer to these sources:

AWS Prescriptive Guidance on Data Engineering

AWS Big Data Use Cases

Key AWS Services for Data Engineering Projects

Top 10 AWS Services for Data Engineering

AWS Data Engineering Essentials Guidebook

AWS Data Engineering Guide: Everything You Need to Know

Exploring Data Engineering Services in AWS

By leveraging AWS data engineering services, organizations can transform raw data into valuable insights, enabling better decision-making and competitive advantage.

youtube

#aws cloud data engineer course#aws cloud data engineer training#aws data engineer course#aws data engineer course online#Youtube

0 notes

Text

Essential Skills for a Successful Data Science Career: A Comprehensive Guide

Data science has evolved into one of the most exciting and in-demand fields in the tech industry. As companies increasingly rely on data-driven decision-making, the need for professionals who can extract meaningful insights from data continues to rise. But what skills are necessary to succeed in this dynamic field? In this guide, we’ll explore the critical technical, analytical, and soft skills required to thrive as a data scientist.

Core Skills Required for Data Science

Becoming an effective data scientist requires a well-rounded skill set, combining technical expertise with problem-solving and communication abilities. Here are the essential skills you’ll need:

1. Proficiency in Programming

Coding is at the heart of data science. The most important programming languages include:

Python – The go-to language for data science due to its versatility and extensive libraries like Pandas, NumPy, Scikit-Learn, and TensorFlow.

R – A favorite for statistical analysis and data visualization.

SQL – Essential for managing and querying large datasets stored in relational databases.

Java/Scala – Commonly used in big data environments such as Apache Spark.

2. Strong Foundation in Mathematics and Statistics

Understanding mathematical concepts is crucial for making sense of data and building machine learning models. Key areas include:

Probability and Statistics – Used in hypothesis testing, predictive modeling, and data distributions.

Linear Algebra – Essential for understanding machine learning algorithms and data transformations.

Calculus – Important for optimization techniques, particularly in deep learning.

3. Machine Learning and Artificial Intelligence

Data scientists must be comfortable with machine learning techniques to develop predictive models. Some key areas include:

Supervised and Unsupervised Learning – Understanding how different models work and when to apply them.

Deep Learning – Utilizing neural networks and tools like TensorFlow and PyTorch.

Model Evaluation and Tuning – Techniques such as cross-validation, hyperparameter tuning, and feature engineering.

4. Data Wrangling and Preprocessing

Before deriving insights, raw data must be cleaned and prepared. This involves:

Handling missing values and outliers.

Transforming data into a usable format.

Merging and manipulating datasets efficiently.

5. Big Data Technologies

As datasets grow in complexity and size, knowledge of big data tools is increasingly valuable. Common tools include:

Apache Hadoop

Apache Spark

Google BigQuery

Amazon Redshift

6. Data Visualization and Storytelling

Communicating insights effectively is just as important as analyzing data. Popular visualization tools include:

Matplotlib and Seaborn (Python)

Tableau

Power BI

Google Data Studio

7. Cloud Computing and Model Deployment

With more companies leveraging cloud-based solutions, familiarity with cloud platforms is a must:

Amazon Web Services (AWS) – Services like S3, EC2, and SageMaker.

Google Cloud Platform (GCP) – Includes BigQuery, Vertex AI, and Cloud ML Engine.

Microsoft Azure – Features like Azure Machine Learning and Synapse Analytics.

8. Business Acumen and Industry Knowledge

Understanding how data science applies to business problems is key. Important aspects include:

Defining business challenges and aligning them with data-driven solutions.

Evaluating the impact of machine learning models on business operations.

Presenting findings in a way that decision-makers can act on.

9. Communication and Collaboration

Data scientists must bridge the gap between technical teams and business leaders. Effective communication skills help in:

Explaining complex data insights in simple terms.

Writing clear reports and documentation.

Collaborating with teams including engineers, analysts, and executives.

How to Build and Strengthen Your Data Science Skills

Mastering data science requires dedication, continuous learning, and hands-on practice. Here are some ways to build your expertise:

1. Enroll in a High-Quality Data Science Program

A structured learning path can accelerate your progress. One of the best institutions offering industry-relevant programs is the Boston Institute of Analytics (BIA).

Boston Institute of Analytics – Best Online Data Science Programs

BIA offers comprehensive online data science courses tailored for aspiring professionals. These programs cover:

Python and R programming

Machine learning and AI fundamentals

Big data technologies and cloud computing

Data visualization and storytelling

Business analytics applications

Why Choose BIA?

Industry-Aligned Curriculum – Courses designed in collaboration with data science experts.

Hands-On Learning – Real-world case studies and projects.

Career Support & Certification – Globally recognized credentials with job placement assistance.

Flexible Learning Options – Online and hybrid learning models available.

2. Work on Practical Projects

Gaining real-world experience is crucial for developing confidence and showcasing your abilities. Participate in:

Kaggle competitions.

Open-source projects on GitHub.

Personal projects using datasets from sources like Google Colab or UCI Machine Learning Repository.

3. Join the Data Science Community

Engaging with other professionals helps in knowledge sharing and networking. Join:

Kaggle forums.

Medium’s Towards Data Science blog.

Google Developer Groups and online meetups.

4. Stay Updated with Industry Trends

Technology in data science evolves rapidly. To stay ahead, follow:

AI and data science research from Google AI and OpenAI.

Online courses from platforms like Coursera, Udacity, and edX.

Webinars and podcasts featuring leading data scientists.

Conclusion

Succeeding in data science requires a blend of programming, analytical, and business skills. From mastering machine learning to communicating insights effectively, a well-rounded skill set will set you apart in this competitive field. If you’re looking for a structured learning approach, enrolling in a recognized program like the Boston Institute of Analytics’ Best Online Data Science Programs can provide the guidance and hands-on experience needed.

By continually learning, engaging with the data science community, and working on real-world problems, you can build a successful career in this exciting and ever-evolving field.

#best data science institute#data science course#data science training#AI Training Program#Best Online Data Science Programs#Data Science Program

0 notes

Text

A Deep Dive into Amazon Redshift: Your Guide to Cloud Data Warehousing

In the era of big data, organizations are increasingly turning to cloud solutions for efficient data management and analysis. Amazon Redshift, a prominent service from Amazon Web Services (AWS), has become a go-to choice for businesses looking to optimize their data warehousing capabilities. In this blog, we will explore what Amazon Redshift is, its architecture, features, and how it can transform your approach to data analytics.

If you want to advance your career at the AWS Course in Pune, you need to take a systematic approach and join up for a course that best suits your interests and will greatly expand your learning path.

What is Amazon Redshift?

Amazon Redshift is a fully managed, cloud-based data warehouse service designed to handle large-scale data processing and analytics. It enables businesses to analyze vast amounts of structured and semi-structured data quickly and efficiently. With its architecture tailored for high performance, Redshift allows users to run complex queries and generate insights in real time.

The Architecture of Amazon Redshift

Understanding the architecture of Redshift is crucial to appreciating its capabilities. Here are the key components:

1. Columnar Storage

Unlike traditional row-based databases, Redshift uses a columnar storage model. This approach allows for more efficient data retrieval, as only the necessary columns are accessed during queries, significantly speeding up performance.

2. Massively Parallel Processing (MPP)

Redshift employs a massively parallel processing architecture, distributing workloads across multiple nodes. This means that queries can be processed simultaneously, enhancing speed and efficiency.

3. Data Compression

Redshift automatically compresses data to save storage space and improve query performance. By reducing the amount of data that needs to be scanned, it accelerates query execution.

4. Snapshots and Backups

Redshift provides automated snapshots of your data warehouse. This feature ensures data durability and allows for easy restoration in case of failure, enhancing data security.

To master the intricacies of AWS and unlock its full potential, individuals can benefit from enrolling in the AWS Online Training.

Key Features of Amazon Redshift

1. Scalability

Redshift is designed to grow with your data. You can start with a small data warehouse and scale up to petabytes as your data needs expand. This scalability is vital for businesses experiencing rapid growth.

2. Integration with AWS Ecosystem

As part of AWS, Redshift integrates seamlessly with other services like Amazon S3, AWS Glue, and Amazon QuickSight. This integration simplifies data ingestion, transformation, and visualization, creating a cohesive data ecosystem.

3. Advanced Security Features

Redshift offers robust security measures, including data encryption, network isolation with Amazon VPC, and user access controls through AWS IAM. This ensures that your data remains secure and compliant with industry standards.

4. Cost-Effectiveness

With a pay-as-you-go pricing model, Redshift allows businesses to optimize costs based on their usage. Options for reserved instances further enhance cost savings, making it an attractive choice for organizations of all sizes.

Redshift supports a wide range of analytical queries, empowering businesses to explore data trends, customer behavior, and operational efficiencies comprehensively.

Conclusion

Amazon Redshift stands out as a powerful solution for cloud data warehousing and analytics. Its scalable architecture, high performance, and seamless integration with other AWS services make it an ideal choice for businesses looking to leverage their data effectively.

Whether you're a small startup or a large enterprise, Redshift can provide the tools you need to make data-driven decisions and stay competitive in today's data-centric landscape.

0 notes

Text

Real-Time Data Processing with Amazon Kinesis

Real-Time Data Processing with Amazon Kinesis

Amazon Kinesis is a fully managed AWS service designed for real-time data streaming and processing.

It allows organizations to ingest, process, and analyze large volumes of streaming data from various sources, such as application logs, IoT devices, social media feeds, and event-driven applications.

Key Components of Amazon Kinesis

Kinesis Data Streams — Enables real-time ingestion and processing of high-throughput streaming data.

Kinesis Data Firehose — Automatically loads streaming data into AWS services like S3, Redshift, and Elasticsearch.

Kinesis Data Analytics — Runs SQL-based queries on real-time data streams for insights.

Kinesis Video Streams — Streams and processes live video from connected devices.

How Amazon Kinesis

Works Data Ingestion — Captures real-time data from various sources.

Processing & Transformation — Uses Kinesis Data Analytics or AWS Lambda for real-time data transformation.

Storage & Analysis — Sends processed data to destinations like Amazon Redshift, S3, or dashboards for visualization.

Use Cases of Amazon Kinesis

Real-time log monitoring and anomaly detection Live video streaming analytics IoT data processing Fraud detection in financial transactions Clickstream analytics for user behavior tracking

0 notes

Text

Data Pipeline Architecture for Amazon Redshift: An In-Depth Guide

In the era of big data and analytics, Amazon Redshift stands out as a popular choice for managing and analyzing vast amounts of structured and semi-structured data. To leverage its full potential, a well-designed data pipeline for Amazon Redshift is crucial. This article explores the architecture of a robust data pipeline tailored for Amazon Redshift, detailing its components, workflows, and best practices.

Understanding a Data Pipeline for Amazon Redshift

A data pipeline is a series of processes that extract, transform, and load (ETL) data from various sources into a destination system for analysis. In the case of Amazon Redshift, the pipeline ensures data flows seamlessly from source systems into this cloud-based data warehouse, where it can be queried and analyzed.

Key Components of a Data Pipeline Architecture

A comprehensive data pipeline for Amazon Redshift comprises several components, each playing a pivotal role:

Data Sources These include databases, APIs, file systems, IoT devices, and third-party services. The data sources generate raw data that must be ingested into the pipeline.

Ingestion Layer The ingestion layer captures data from multiple sources and transports it into a staging area. Tools like AWS DataSync, Amazon Kinesis, and Apache Kafka are commonly used for this purpose.

Staging Area Before data is loaded into Amazon Redshift, it is often stored in a temporary staging area, such as Amazon S3. This step allows preprocessing and ensures scalability when handling large data volumes.

ETL/ELT Processes The ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform) layer prepares the data for Redshift. Tools like AWS Glue, Apache Airflow, and Matillion help clean, transform, and structure data efficiently.

Amazon Redshift The central data warehouse where transformed data is stored and optimized for querying. Amazon Redshift's columnar storage and MPP (Massively Parallel Processing) architecture make it ideal for analytics.

Data Visualization and Analytics Tools like Amazon QuickSight, Tableau, or Power BI connect to Redshift to visualize and analyze the data, providing actionable insights.

Data Pipeline Workflow

A well-designed pipeline operates in sequential or parallel workflows, depending on the complexity of the data and the business requirements:

Data Extraction: Data is extracted from source systems and moved into a staging area, often with minimal transformations.

Data Transformation: Raw data is cleaned, enriched, and structured to meet the schema and business logic requirements of Redshift.

Data Loading: Transformed data is loaded into Amazon Redshift tables using COPY commands or third-party tools.

Data Validation: Post-load checks ensure data accuracy, consistency, and completeness.

Automation and Monitoring: Scheduled jobs and monitoring systems ensure the pipeline runs smoothly and flags any issues.

Best Practices for Data Pipeline Architecture

To maximize the efficiency and reliability of a data pipeline for Amazon Redshift, follow these best practices:

Optimize Data Ingestion

Use Amazon S3 as an intermediary for large data transfers.

Compress and partition data to minimize storage and improve query performance.

Design for Scalability

Choose tools and services that can scale as data volume grows.

Leverage Redshift's spectrum feature to query data directly from S3.

Prioritize Data Quality

Implement rigorous data validation and cleansing routines.

Use AWS Glue DataBrew for visual data preparation.

Secure Your Pipeline

Use encryption for data at rest and in transit.

Configure IAM roles and permissions to restrict access to sensitive data.

Automate and Monitor

Schedule ETL jobs with AWS Step Functions or Apache Airflow.

Set up alerts and dashboards using Amazon CloudWatch to monitor pipeline health.

Tools for Building a Data Pipeline for Amazon Redshift

Several tools and services streamline the process of building and managing a data pipeline for Redshift:

AWS Glue: A serverless data integration service for ETL processes.

Apache Airflow: An open-source tool for workflow automation and orchestration.

Fivetran: A SaaS solution for automated data integration.

Matillion: A cloud-native ETL tool optimized for Redshift.

Amazon Kinesis: A service for real-time data streaming into Redshift.

Benefits of a Well-Architected Data Pipeline

A robust data pipeline for Amazon Redshift provides several advantages:

Efficiency: Automates complex workflows, saving time and resources.

Scalability: Handles growing data volumes seamlessly.

Reliability: Ensures consistent data quality and availability.

Actionable Insights: Prepares data for advanced analytics and visualization.

Conclusion

Designing an efficient data pipeline for Amazon Redshift is vital for unlocking the full potential of your data. By leveraging modern tools, adhering to best practices, and focusing on scalability and security, businesses can streamline their data workflows and gain valuable insights. Whether you’re a startup or an enterprise, a well-architected data pipeline ensures that your analytics capabilities remain robust and future-ready. With the right pipeline in place, Amazon Redshift can become the backbone of your data analytics strategy, helping you make data-driven decisions with confidence.

0 notes

Text

7 Best Data Warehouse Tools to Explore in 2025

What is a Data Warehouse?

A data warehouse is a centralized repository designed to store large volumes of data from various sources in an organized, structured format. It facilitates efficient querying, analysis, and reporting of data, serving as a vital component for business intelligence and analytics.

Types of Data Warehouses

Data warehouses can be classified into the following categories:

Enterprise Data Warehouse (EDW): A unified storage hub for all enterprise data.

Operational Data Store (ODS): Stores frequently updated, real-time data.

Online Analytical Processing (OLAP): Designed for complex analytical queries on large datasets.

Data Mart: A focused subset of a data warehouse for specific departments or business units.

Why Use Data Warehouses?

The primary purpose of data warehouses is to store and organize data centrally, enabling faster and more efficient analysis of large datasets. Other benefits include:

Improved Data Quality: Processes ensure data integrity and consistency.

Historical Data Storage: Supports trend analysis and forecasting.

Enhanced Accessibility: Allows seamless access and querying of data from multiple sources.

Who Uses Data Warehouses?

Data warehouses cater to various professionals across industries:

Data Analysts: Query and analyze data for actionable insights.

Data Engineers: Build and maintain the underlying infrastructure.

Business Intelligence Analysts: Generate reports and visualizations for stakeholders.

Analytics Engineers: Optimize data pipelines for efficient loading.

Companies often use data warehouses to store vast amounts of customer data, sales information, and financial records. Modern trends include adopting data lakes and data lake houses for advanced analytics.

Top Data Warehouse Tools to Watch in 2025

1. Snowflake

Snowflake is a cloud-native data warehouse renowned for its flexibility, security, and scalability.

Key Features:

Multi-cluster Architecture: Supports scalability and separates compute from storage.

Virtual Warehouses: On-demand setup for parallel workload handling.

Data Sharing: Facilitates secure data sharing across organizations.

Snowflake integrates seamlessly with tools like dbt, Tableau, and Looker, making it a cornerstone of the modern data stack.

2. Amazon S3

Amazon S3 is a highly scalable, object-based storage service, widely used as a data warehousing solution.

Key Features:

Scalability: Capable of handling any data volume.

AWS Ecosystem Integrations: Enhances processing and analytics workflows.

Cost-effectiveness: Pay-as-you-go pricing model.

Ideal for organizations already leveraging AWS services, Amazon S3 offers unparalleled flexibility and durability.

3. Google Big Query

Google Big Query is a server less, highly scalable solution designed for real-time insights.

Key Features:

Fast Querying: Processes petabytes of data in seconds.

Automatic Scaling: No manual resource management required.

Integrated Machine Learning: Supports advanced analytics.

Big Query’s seamless integration with Google Cloud services and third-party tools makes it a top choice for modern data stacks.

4. Data bricks

Data bricks is a unified analytics platform combining data engineering, science, and business intelligence.

Key Features:

Spark-based Engine: Enables fast, large-scale data processing.

ML flow: Streamlines machine learning lifecycle management.

Real-time Analytics: Processes streaming data effortlessly.

Data bricks supports Python, SQL, R, and Scala, appealing to diverse data professionals.

5. Amazon Redshift

Amazon Redshift is a fully managed, high-performance data warehouse tailored for structured and semi-structured data.

Key Features:

Columnar Storage: Optimized query performance.

Massively Parallel Processing (MPP): Accelerates complex queries.

AWS Integrations: Works well with S3, DynamoDB, and Elastic MapReduce.

Its scalability and cost-effectiveness make it popular among startups and enterprises alike.

6. Oracle Autonomous Data Warehouse

Oracle Autonomous Data Warehouse automates the creation and management of data warehouses using machine learning.

Key Features:

Autonomous Operations: Self-tuning and optimized storage.

Elastic Scalability: Adjusts resources dynamically based on workload.

Built-in ML Algorithms: Facilitates advanced analytics.

Best suited for enterprises seeking robust, automated solutions with high performance.

7. PostgreSQL

PostgreSQL is a versatile, open-source relational database that supports data warehousing needs.

Key Features:

ACID Compliance: Ensures data integrity.

Multi-version Concurrency Control (MVCC): Allows simultaneous access.

Extensibility: Offers plugins like PostgreSQL Data Warehousing by Citus.

Its robust community support and adaptability make PostgreSQL a reliable choice for organizations of all sizes.

Next Steps

Key Takeaways:

Data warehouses enable efficient organization and analysis of large datasets.

Popular tools include Snowflake, Amazon S3, Google BigQuery, Databricks, Amazon Redshift, Oracle, and PostgreSQL.

How to Advance Your Knowledge:

Explore Data Analytics Tools: Get acquainted with platforms like Tableau and dbt.

Learn Data Analytics: Try Career Foundry’s free, 5-day data analytics short course.

Join Live Events: Participate in online events with industry experts.

Take the first step towards becoming a data analyst. Enroll in Career Foundry’s data analytics program and unlock a new career path today.

0 notes

Text

Power BI Training | Power BI Online Training

Power BI Training: What Types of Data Can Power BI Handle?

Power BI Training equips individuals and businesses with the skills to transform raw data into actionable insights. One of the primary reasons Power BI has gained immense popularity in recent years is its unparalleled ability to handle a wide variety of data types. Whether you’re analyzing financial reports, visualizing customer trends, or integrating cloud data sources, Power BI is a versatile tool that supports diverse data formats and systems. With the growing demand for Power BI Online Training, users can now easily master the techniques to utilize its capabilities and integrate data from multiple sources seamlessly.

In this article, we will explore the types of data that Power BI can handle and highlight how the tool empowers users to extract value from various data streams. From relational databases to real-time cloud data, understanding Power BI's compatibility with different data formats is essential for both beginners and advanced users.

Relational Databases: The Foundation of Business Insights

Relational databases are among the most commonly used data sources in businesses. Power BI connects seamlessly with relational databases such as SQL Server, MySQL, Oracle Database, and PostgreSQL. These databases store structured data in tables, making them ideal for generating reports and dashboards. Power BI Training emphasizes leveraging these connections to perform efficient data modeling, create calculated columns, and design measures using DAX (Data Analysis Expressions).

By utilizing Power BI's built-in data connectors, users can import large volumes of structured data without compromising performance. Whether it’s sales data, inventory records, or employee metrics, Power BI empowers users to uncover insights through its interactive dashboards.

Excel and CSV Files: Simplicity Meets Functionality

Power BI is widely known for its compatibility with Excel and CSV files, making it a favourite tool among professionals who work with these formats. Many organizations rely on spreadsheets for budgeting, forecasting, and reporting. Power BI allows users to directly import and transform this data into visually appealing reports.

With Power BI Online Training, users can also learn how to automate the refresh of data from Excel files stored on One Drive or SharePoint. This ensures that reports and dashboards remain up-to-date without manual intervention. Its drag-and-drop interface simplifies the process of merging multiple datasets, enabling users to create comprehensive views of their business operations.

Cloud Data Sources: Real-Time Data at Your Fingertips

As businesses transition to cloud-based platforms, the ability to analyze real-time data has become critical. Power BI supports a wide range of cloud-based data sources, including Azure SQL Database, Google BigQuery, Amazon Redshift, and Salesforce. This capability allows users to connect to live datasets and monitor key performance indicators (KPIs) in real time.

Power BI Training often covers best practices for working with cloud data, including configuring gateways and optimizing queries for faster performance. This is particularly useful for businesses that rely on SaaS (Software as a Service) applications for their day-to-day operations. Real-time analytics in Power BI can drive immediate decision-making, helping businesses stay competitive in dynamic markets.

Unstructured Data: Making Sense of Complexity

While structured data is easy to analyze, unstructured data presents a unique challenge. Unstructured data includes formats like text files, PDFs, social media content, and multimedia files. With the help of tools like Power BI and its integration with Azure Cognitive Services, users can analyze and visualize unstructured data effectively.

For example, sentiment analysis can be performed on customer reviews or social media posts, providing businesses with valuable insights into consumer behaviour. Advanced Power BI Online Training delves into techniques for handling unstructured data, including natural language processing (NLP) and custom visualizations.

Big Data: Harnessing Volume, Variety, and Velocity

In the era of big data, organizations must process large datasets that often come from diverse sources. Power BI integrates seamlessly with big data platforms like Hadoop, Apache Spark, and Azure Data Lake. These integrations enable users to analyze massive datasets without requiring extensive technical expertise.

During Power BI Training, participants learn how to use DirectQuery and import modes to optimize big data workflows. DirectQuery allows users to query large datasets without importing them into Power BI, which is particularly useful for businesses dealing with high-volume data streams. These capabilities make Power BI an essential tool for industries such as finance, healthcare, and retail.

APIs and Custom Data Sources: Flexibility Redefined

Power BI's ability to connect with APIs (Application Programming Interfaces) opens up a world of possibilities for developers and data analysts. APIs allow Power BI to pull data from custom sources, including proprietary systems and third-party applications. By configuring API connections, users can create custom dashboards tailored to their specific needs.

Through Power BI Online Training, users can learn how to set up and manage these custom integrations. This feature is particularly beneficial for organizations that rely on niche software solutions for their operations. With Power BI, even the most complex data sources can be visualized and shared across teams.

IoT Data: Driving Innovation with Real-Time Insights

The Internet of Things (IoT) has revolutionized industries by providing real-time data from sensors, devices, and machines. Power BI supports IoT data integration through Azure IoT Hub, enabling users to monitor equipment performance, track energy consumption, or analyze logistics operations in real time.

Participants in Power BI Training gain hands-on experience in working with IoT data, learning how to build dynamic dashboards that capture and visualize live data streams. These capabilities are critical for industries like manufacturing, transportation, and energy, where timely insights can prevent downtime and improve efficiency.

Conclusion

Power BI's versatility lies in its ability to handle a wide range of data types, from structured and unstructured datasets to real-time IoT and big data sources. By integrating with relational databases, spreadsheets, cloud services, and APIs, Power BI has established itself as a comprehensive tool for data analysis and visualization.

For professionals and organizations looking to harness the full potential of this tool, Power BI Training provides the foundation needed to master its features. Whether you're a beginner or an experienced analyst, Power BI Online Training ensures that you can work efficiently with various data formats and create impactful reports. With Power BI, the possibilities for data-driven decision-making are virtually limitless.

Visualpath is the Leading and Best Institute for learning in Hyderabad. We provide Power BI Course Online. You will get the best course at an affordable cost.

Attend Free Demo

Call on – +91-9989971070

Blog: https://visualpathblogs.com/

What’s App: https://www.whatsapp.com/catalog/919989971070/

Visit: https://www.visualpath.in/powerbi-training.html

#Power BI Training#Power BI Online Training#Power BI Course Online#Power BI Training in Hyderabad#Power BI Training in Ameerpet#Power BI Training Institute in Hyderabad#Power BI Online Training Hyderabad#Power BI Course in Hyderabad

1 note

·

View note

Text

Amazon QuickSight Training Course | AWS QuickSight Online Training

AWS QuickSight vs. Tableau: Which Data Visualization Tool is Right for You?

Amazon QuickSight Training, you're likely exploring advanced business intelligence and data visualization tools to elevate your analytical capabilities. AWS QuickSight and Tableau are two leading solutions in this domain, each with unique features catering to diverse user needs. Whether you're a business looking for cost efficiency or a professional seeking robust features, choosing the right tool is crucial.

Overview of AWS QuickSight and Tableau

AWS QuickSight, Amazon's cloud-based BI solution, is designed to integrate seamlessly with other AWS services. It enables users to analyze data and share insights through interactive dashboards. On the other hand, Tableau, now part of Salesforce, is a veteran in the BI space, renowned for its user-friendly interface and extensive capabilities in data analysis.

AWS QuickSight shines with its cost-effectiveness and integration with Amazon Web Services, making it a favorite for businesses already using AWS. Tableau, however, excels in providing detailed, customizable dashboards and advanced analytics, catering to users needing more granular control.

Ease of Use

For beginners, AWS QuickSight offers a simpler, more intuitive interface, making it an excellent choice for users who prefer to avoid steep learning curves. Many users who undergo AWS QuickSight Online Training appreciate its guided learning paths and ease of implementation, especially when managing data from AWS sources. Its automated insights feature allows for faster decision-making, a key advantage for businesses with tight deadlines.

Tableau, while robust, has a steeper learning curve. Advanced users or those familiar with similar tools will find its extensive customization options invaluable. However, for new users, investing time in training is necessary to harness its full potential.

Integration Capabilities

AWS QuickSight integrates effortlessly with Amazon’s ecosystem, such as S3, Redshift, and RDS. This makes it a preferred choice for businesses already operating within the AWS framework. By enrolling in Amazon QuickSight Training, users can master these integrations, leveraging them to drive better decision-making.

Tableau, on the other hand, offers broad integration capabilities beyond cloud services, supporting various databases, third-party apps, and cloud platforms like Google Cloud and Azure. This flexibility makes it ideal for companies with heterogeneous IT infrastructures.

Scalability and Performance

AWS QuickSight boasts impressive scalability, making it a go-to option for businesses experiencing rapid growth. Its pay-per-session pricing model ensures affordability, even as user demand scales. This feature is highly valued by startups and SMBs, where cost management is crucial. QuickSight's serverless architecture means performance remains high, regardless of user volume, which is emphasized in AWS QuickSight Online Training modules.

Tableau provides excellent performance for static environments but may require additional resources for scaling, especially in enterprise setups. Tableau’s licensing can also be cost-prohibitive for smaller teams, making AWS QuickSight a more economical alternative in such scenarios.

Customization and Advanced Features

For users seeking deep customization and advanced analytics, Tableau has the edge. Its vast library of pre-built visualizations and tools like Tableau Prep for data cleaning are unmatched. However, AWS QuickSight has been catching up with features like SPICE (Super-fast, Parallel, In-memory Calculation Engine) and ML Insights. These innovations enable QuickSight to deliver insights faster and support advanced analytical needs, which are integral to any Amazon QuickSight Training curriculum.

Cost Considerations

AWS QuickSight is known for its cost-effective pricing, particularly its pay-per-session model, which eliminates the need for upfront investments. This makes it accessible to businesses of all sizes. Tableau, while offering rich features, follows a subscription-based pricing model that can be expensive, especially for large teams or enterprise setups. For organizations looking to maximize their ROI, AWS QuickSight Online Training can help users extract maximum value from this tool.

Key Use Cases

AWS QuickSight: Ideal for organizations deeply integrated with AWS, looking for scalable, cost-effective BI tools.

Tableau: Best suited for businesses requiring highly detailed analytics and those with diverse IT infrastructures.

Why Training is Essential?

For both tools, training plays a crucial role in maximizing their potential. Whether it's mastering AWS QuickSight’s seamless AWS integrations or Tableau’s intricate visualization capabilities, a structured learning path is essential. Enrolling in Amazon QuickSight Training or other specialized courses ensures users can confidently navigate features, optimize workflows, and derive actionable insights.

Conclusion

Both AWS QuickSight and Tableau have unique strengths, making them suitable for different scenarios. AWS QuickSight’s simplicity, cost-effectiveness, and integration with the AWS ecosystem make it an excellent choice for small to medium-sized businesses and startups. Tableau, with its advanced customization and broader integration capabilities, is a better fit for enterprises needing sophisticated analytics.

By enrolling in Amazon QuickSight Training or AWS QuickSight Online Training, users can develop the skills necessary to unlock the full potential of these tools. Ultimately, the choice between AWS QuickSight and Tableau depends on your specific business needs, budget, and the level of complexity required in your data visualization efforts. Both are powerful tools that can transform how businesses interact with and interpret their data, driving smarter decisions and better outcomes.

Visualpath offers AWS QuickSight Online Training for the next generation of intelligent business applications. AWS QuickSight Training in Hyderabad from industry experts and gain hands-on experience with our interactive program. Accessible globally, including in the USA, UK, Canada, Dubai, and Australia. With daily recordings and presentations available for later review. To book a free demo session, for more info, call +91-9989971070.

Key Points: AWS, Amazon S3, Amazon Redshift, Amazon RDS, Amazon Athena, AWS Glue, Amazon DynamoDB, AWS IoT Analytics, ETL Tools.

Attend Free Demo

Call Now: +91-9989971070

Whatsapp: https://www.whatsapp.com/catalog/919989971070

Visit our Blog: https://visualpathblogs.com/

Visit: https://www.visualpath.in/online-amazon-quicksight-training.html

#Amazon QuickSight Training#AWS QuickSight Online Training#Amazon QuickSight Course Online#AWS QuickSight Training in Hyderabad#Amazon QuickSight Training Course#AWS QuickSight Training

0 notes

Text

Amazon Redshift: A Quick-Start Guide To Data Warehousing

Amazon Redshift offers the finest price-performance cloud data warehouse to support data-driven decision-making.

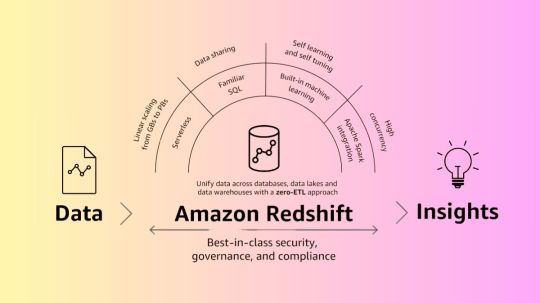

What is Amazon Redshift?

Amazon Redshift leverages machine learning and technology created by AWS to provide the greatest pricing performance at any scale, utilizing SQL to analyze structured and semi-structured data across data lakes, operational databases, and data warehouses.

With only a few clicks and no data movement or transformation, you can break through data silos and obtain real-time and predictive insights on all of your data.

With performance innovation out of the box, you may achieve up to three times higher pricing performance than any other cloud data warehouse without paying extra.

Use a safe and dependable analytics solution to turn data into insights in a matter of seconds without bothering about infrastructure administration.

Why Amazon Redshift?

Every day, tens of thousands of customers utilize Amazon Redshift to deliver insights for their organizations and modernize their data analytics workloads. Amazon Redshift’s fully managed, AI-powered massively parallel processing (MPP) architecture facilitates swift and economical corporate decision-making. With AWS’s zero-ETL strategy, all of your data is combined for AI/ML applications, near real-time use cases, and robust analytics. With the help of cutting-edge security features and fine-grained governance, data can be shared and collaborated on safely and quickly both inside and between businesses, AWS regions, and even third-party data providers.

Advantages

At whatever size, get the optimal price-performance ratio

With a fully managed, AI-powered, massively parallel processing (MPP) data warehouse designed for speed, scale, and availability, you can outperform competing cloud data warehouses by up to six times.

Use zero-ETL to unify all of your data

Use a low-code, zero-ETL strategy for integrated analytics to quickly access or ingest data from your databases, data lakes, data warehouses, and streaming data.

Utilize thorough analytics and machine learning to optimize value

Utilize your preferred analytics engines and languages to run SQL queries, open source analytics, power dashboards and visualizations, and activate near real-time analytics and AI/ML applications.

Use safe data cooperation to innovate more quickly

With fine-grained governance, security, and compliance, you can effortlessly share and collaborate on data both inside and between your businesses, AWS regions, and even third-party data sets without having to move or copy data by hand.

How it works

In order to provide the best pricing performance at any scale, Amazon Redshift leverages machine learning and technology created by AWS to analyze structured and semi-structured data from data lakes, operational databases, and data warehouses using SQL.

Use cases

Boost demand and financial projections

Allows you to create low latency analytics apps for fraud detection, live leaderboards, and the Internet of Things by consuming hundreds of megabytes of data per second.

Make the most of your business intelligence

Using BI tools like Microsoft PowerBI, Tableau, Amazon QuickSight, and Amazon Redshift, create insightful reports and dashboards.

Quicken SQL machine learning

To support advanced analytics on vast amounts of data, SQL can be used to create, train, and implement machine learning models for a variety of use cases, such as regression, classification, and predictive analytics.

Make money out of your data

Create apps using all of your data from databases, data lakes, and data warehouses. To increase consumer value, monetize your data as a service, and open up new revenue sources, share and work together in a seamless and safe manner.

Easily merge your data with data sets from outside parties

Subscribe to and merge third-party data in AWS Data Exchange with your data in Amazon Redshift, whether it’s market data, social media analytics, weather data, or more, without having to deal with licensing, onboarding, or transferring the data to the warehouse.

Amazon Redshift concepts

Amazon Redshift Serverless helps you examine data without provisioning a data warehouse. Automatic resource provisioning and intelligent data warehouse capacity scaling ensure quick performance for even the most demanding and unpredictable applications. The data warehouse is free when idle, so you only pay for what you use. The Amazon Redshift query editor v2 or your favorite BI tool lets you load data and query immediately. Take advantage of the greatest pricing performance and familiar SQL capabilities in a zero-administration environment.

If your company is eligible and your cluster is being formed in an AWS Region without Amazon Redshift Serverless, you may be eligible for the free trial. Choose Production or Free trial to answer. For what will you use this cluster? Free trial creates a dc2.large node configuration. AWS Regions with Amazon Redshift Serverless are included in the Amazon Web Services General Reference’s Redshift Serverless API endpoints.

Key Amazon Redshift Serverless ideas are below

Namespace: Database objects and users are in a namespace. Amazon Redshift Serverless namespaces contain schemas, tables, users, datashares, and snapshots.

Workgroup: A collection of computer resources. Amazon Redshift Serverless computes in workgroups. Redshift Processing Units, security groups, and use limits are examples. Configure workgroup network and security settings using the Amazon Redshift Serverless GUI, AWS Command Line Interface, or APIs.

Important Amazon Redshift supplied cluster concepts:

Cluster: A cluster is an essential part of an Amazon Redshift data warehouse’s infrastructure.

A cluster has compute nodes. Compiled code runs on compute nodes.

An additional leader node controls two or more computing nodes in a cluster. Business intelligence tools and query editors communicate with the leader node. Your client application only talks to the leader. External apps can see computing nodes.

Database: A cluster contains one or more databases.

One or more computing node databases store user data. SQL clients communicate with the leader node, which organizes compute node queries. Read about compute and leader nodes in data warehouse system design. User data is grouped into database schemas.

Amazon Redshift is compatible with other RDBMSs. It supports OLTP functions including inserting and removing data like a standard RDBMS. Amazon Redshift excels at batch analysis and reporting.

Amazon Redshift’s typical data processing pipeline and its components are described below.

A example Amazon Redshift data processing path is shown below.Image credit to AWS

An enterprise-class relational database query and management system is Amazon Redshift. Business intelligence (BI), reporting, data, and analytics solutions can connect to Amazon Redshift. Analytic queries retrieve, compare, and evaluate vast volumes of data in various stages to obtain a result.

Multiple data sources upload structured, semistructured, and unstructured data to the data storage layer at the data ingestion layer. This data staging section holds data in various consumption readiness phases. Storage may be an Amazon S3 bucket.

The optional data processing layer preprocesses, validates, and transforms source data using ETL or ELT pipelines. ETL procedures enhance these raw datasets. ETL engines include AWS Glue.

Read more on govindhtech.com

#AmazonRedshift#QuickStartGuide#DataWarehousing#machinelearning#AWSzeroETLstrategy#datawarehouse#AmazonS3#data#aws#news#realtimeanalytics#AmazonQuickSight#technology#technews#govindhtech

0 notes

Text

AWS Data Analytics Services

AWS Data Analytics services provide a comprehensive suite of tools to help organizations collect, process, analyze, and visualize vast amounts of data efficiently. These services include Amazon Redshift for scalable data warehousing, Amazon Athena for querying data in S3 using SQL, AWS Glue for data preparation and ETL (extract, transform, load), and Amazon Kinesis for real-time data streaming. Additionally, Amazon QuickSight offers intuitive data visualization and reporting, while AWS Data Pipeline automates the movement and transformation of data between different AWS compute and storage services. These services help businesses unlock insights from their data quickly and securely, with the ability to scale as data volumes grow.

0 notes

Text

The Benefits of MySQL to Redshift Migration Using Ask On Data

In today's data-driven landscape, organizations are constantly seeking ways to optimize their data management and analytics capabilities. One effective strategy is the migration from MySQL to Amazon Redshift. This shift can unlock significant advantages, especially when facilitated by tools like Ask On Data. In this article, we’ll explore the benefits of MySQL to Redshift migration and how Ask On Data simplifies the process.

Enhanced Performance and Scalability

One of the primary benefits of migrating your data from MySQL to Redshift is enhanced performance. Redshift is a fully managed data warehouse that can handle large volumes of data and complex queries efficiently. Its architecture is designed for high-speed data retrieval and analytics, making it an ideal choice for organizations looking to scale their operations. As data grows, Redshift can seamlessly accommodate increased workloads, allowing businesses to expand without performance degradation.

Cost-Effective Data Storage

Another advantage of MySQL to Redshift migration is the cost-effectiveness of Redshift’s storage solutions. Redshift employs a columnar storage format, which is more efficient than the row-based storage of MySQL. This means that you can store more data at a lower cost while still enjoying fast query performance. For businesses that are cost-conscious, this is a significant factor in favor of making the switch.

Advanced Analytics Capabilities

Redshift is specifically designed for analytics, offering advanced features that MySQL may lack. With support for complex queries, large datasets, and integration with various data visualization tools, migrating to Redshift opens up new opportunities for deep data analysis. Organizations can perform real-time analytics, derive insights, and make data-driven decisions faster than ever.

Simplified Data Migration with Ask On Data

While the benefits of migrating your datafrom MySQL to Redshift are clear, the process can be daunting without the right tools. This is where Ask On Data comes into play. Ask On Data is an NLP-based data migration tool that simplifies the migration process significantly. With its user-friendly interface, you can easily interact with your data using natural language queries, reducing the need for complex coding or technical expertise.

Ask On Data streamlines the entire process of migrating your data from MySQL to Redshift. It efficiently extracts data from MySQL, transforms it to meet Redshift’s schema requirements, and loads it into the target environment—all while ensuring data integrity. This automation not only saves time but also minimizes the risk of errors, making the migration process more reliable.

Real-Time Monitoring and Validation

One of the standout features of Ask On Data is its real-time monitoring capabilities. Users can track the progress of their MySQL to Redshift migration and receive alerts for any discrepancies or issues. This proactive approach to data management helps organizations maintain data quality throughout the migration process, ensuring that all information is accurate and complete.

Conclusion

The migration from MySQL to Redshift presents numerous advantages, including improved performance, cost-effective storage, and enhanced analytics capabilities. By leveraging Ask On Data, organizations can navigate this transition smoothly and efficiently. The tool’s intuitive interface and powerful features enable users to migrate their data with confidence, unlocking the full potential of their data analytics initiatives.

As businesses continue to rely on data for strategic decision-making, the importance of choosing the right data management solutions cannot be overstated. Embracing tools like Ask On Data for your MySQL to Redshift migration can set the stage for long-term success in an increasingly competitive environment.

0 notes

Text

AWS Analytics Services

AWS Analytics Services offer a comprehensive suite of tools designed to help businesses make data-driven decisions. With services like Amazon Redshift for data warehousing, AWS Glue for data integration, and Amazon QuickSight for business intelligence, organizations can analyze large datasets efficiently. These services provide scalable, secure, and cost-effective solutions that empower users to process, store, and visualize data with ease. AWS Analytics Services support various data formats, including structured and unstructured data, enabling seamless integration and analysis across diverse systems. By leveraging these tools, businesses can uncover valuable insights, improve decision-making processes, and optimize overall performance. AWS ensures reliable data management, analysis, and accessibility in real-time.

Click here to know more: https://operisoft.com/data-analytics/unlocking-the-power-of-aws-data-analytics-services-a-comprehensive-guide/

0 notes

Text

AWS Glue is a fully managed extract, transform, and load (ETL) service designed to make it easier for businesses to prepare and integrate data for analytics, machine learning, and application development.

It simplifies the process of combining and analyzing data from multiple sources by automating common data preparation tasks.

Key Features of AWS Glue for ETL and Data Integration:

Serverless Architecture AWS Glue is serverless, meaning there is no infrastructure to manage.

It automatically provisions the necessary resources, scales as needed, and charges only for the resources used.

ETL Automation AWS Glue provides a graphical interface and automated code generation to simplify creating and managing ETL jobs.

Developers can write their own scripts using Python or Scala, or use the Glue Studio visual interface for a no-code/low-code experience. Data Cataloging The AWS Glue Data Catalog automatically discovers, organizes, and indexes metadata about your data.

This makes it easier to locate, manage, and query datasets across a wide range of AWS and external services.

Integration with Other AWS Services AWS Glue seamlessly integrates with services like Amazon S3, Redshift, RDS, and DynamoDB.

This allows businesses to build comprehensive data pipelines using familiar AWS tools.

Support for Various Data Sources AWS Glue supports structured and semi-structured data sources, such as relational databases, JSON files, and CSV files. It can handle on-premises and cloud-based data seamlessly.

Flexible Job Scheduling Glue allows scheduling ETL jobs at regular intervals or triggering them based on events.

This flexibility ensures that your data pipelines are timely and reliable.

Support for Complex Workflows AWS Glue workflows enable orchestration of multiple ETL jobs and dependencies, helping businesses manage sophisticated data integration pipelines.

Why AWS Glue Matters for Businesses:

AWS Glue empowers businesses to:

Save time by automating data preparation tasks.

Reduce costs with its pay-as-you-go model.

Enable faster decision-making by integrating and preparing data for analytics quickly.

Simplify compliance by organizing data and metadata.